HADOOPTALKS - Integrate LVM with HADOOP for elastic storage

Knowing multiple technologies is not a big thing. You need to know how to integrate these technologies with each other to solve some real-use cases. Here I’m trying to solve one real challenge which we mostly facing in the Big Data world or in the HADOOP setup.

So here is what I’m going to perform practically…

In a Hadoop cluster, find how to provide storage elastically (dynamically) to the cluster so you can increase/decrease size when you need further?

So let’s first talk about LVM(Logical Volume Manager)…

LVM is a tool for logical volume management which includes allocating disks, striping, mirroring, and resizing logical volumes.

With LVM, a hard drive or set of hard drives is allocated to one or more physical volumes. LVM physical volumes can be placed on other block devices which might span two or more disks.

Since a physical volume cannot span over multiple drives, to span over more than one drive, create one or more physical volumes per drive.

Now let’s see how we use this in Hadoop Cluster to solve the problem statement…

I use the AWS cloud for setting up my Hadoop cluster with 1 master node and 1 slave node. In the slave node, I’m attaching one more harddisk of 8GB for performing this practical. I want to only give 4GB from my 8GB harddisk to Hadoop to store the Data.

Now we have one physical hard drive…

First step: Creating Physical Volume(PV) from the hard drive…

For this, run command…

pvcreate /dev/xvdf(disk_name)

Second Step: Creating Volume Groups(VG) from the physical volumes

For this, run command…

vgcreate vg_name PV_name

Third Step: Creating logical volumes from the volume groups and assign the logical volumes mount points…

For this, run command…

Now I need to mount this partition with any directory to use it for Hadoop slave node…

For automating LVM partition, I also created one python Script…

GitHub Link: https://github.com/gaurav-gupta-gtm/automate-lvm-partition

Now let’s configure the Hadoop Cluster…

First thing, you need to download Hadoop and JDK software in both master and slave node…

After this install these…

Run these two commands in both name node and slave node. For checking, it installs properly, run...

Now we need to edit some files in master and slave node…

- Master Node:

Make one directory using command…

mkdir /nn

Then Go to /etc/hadoop and first edit hdfs-site.xml

Now edit core-site.xml…

After this, I need to format the namenode first…

- Now come to slave node…

In slave node, we also need to edit both files…

Go to /etc/hadoop and then edit core-site.xml…

Now edit hdfs-site.xml and give directory name in which we mount partition above…

That’s it.

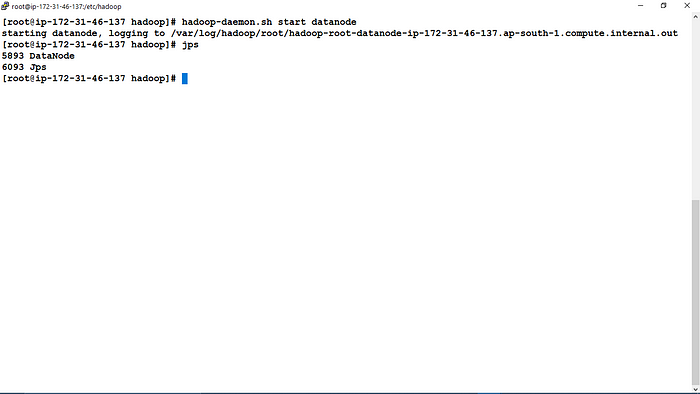

Now let’s start the Hadoop services in both Master and Slave Node…

- In slave node…

- In master node…

You can see that now my HADOOP Cluster is only able to use 4GB from my slave node.

Now the main part comes…

I want to increase my slave storage from 4GB to 6GB without formatting/recreating the partition and this elasticity (in terms of storage) only provided by LVM storage.

For extend your logical volume(LV), run…

lvextend --size +size /partition

Your partition is extended but it is not shown yet… So for resize, run this…

resize2fs /partition

Now you can see my Volume is increased dynamically…

Let’s check the master node now…

You can see from here too that now my DataNode contributes almost 6GB storage to my Cluster. 🤗